Fraunhofer FOKUS’ AI for Media research team has extensive experience in delivering data-driven projects across different industries. The team builds end-to-end solutions and focuses on applied data science topics.

The most important aspect of data science and engineering is defining valuable research questions that form the foundation for further data analysis. Fraunhofer data scientists help users and customers understand the core questions and assist in translating business requirements into technical approaches. Once the customer data is available, it is cleaned, structured, and integrated.
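The cleaning, structuring and integration step can be illustrated with a minimal, standard-library sketch. The field names (`user_id`, `genre`, `minutes_watched`, `region`) and the sample records are hypothetical and chosen only for illustration; real projects would typically use a dataframe library and rules agreed with the customer.

```python
from statistics import mean

def clean_records(rows):
    """Drop rows without an id, normalise text fields, keep the first row per id."""
    seen = set()
    cleaned = []
    for row in rows:
        user_id = (row.get("user_id") or "").strip()
        if not user_id or user_id in seen:
            continue  # skip rows with a missing id and duplicates
        seen.add(user_id)
        minutes = row.get("minutes_watched")
        cleaned.append({
            "user_id": user_id,
            "genre": (row.get("genre") or "unknown").strip().lower(),
            "minutes_watched": float(minutes) if minutes not in (None, "") else None,
        })
    return cleaned

def impute_mean(rows, field):
    """Replace missing numeric values with the mean of the known values."""
    known = [r[field] for r in rows if r[field] is not None]
    fill = mean(known) if known else 0.0
    return [{**r, field: fill if r[field] is None else r[field]} for r in rows]

def integrate(usage_rows, profile_rows):
    """Left join of usage data with profile data on user_id."""
    profiles = {p["user_id"]: p for p in profile_rows}
    return [
        {**r, "region": profiles.get(r["user_id"], {}).get("region", "unknown")}
        for r in usage_rows
    ]

# Hypothetical raw inputs from two separate sources.
raw_usage = [
    {"user_id": " u1 ", "genre": "News ", "minutes_watched": "30"},
    {"user_id": "u2", "genre": "sports", "minutes_watched": ""},
    {"user_id": "u1", "genre": "news", "minutes_watched": "30"},  # duplicate id
    {"user_id": "", "genre": "drama", "minutes_watched": "12"},   # missing id
    {"user_id": "u3", "genre": None, "minutes_watched": "60"},
]
profiles = [
    {"user_id": "u1", "region": "Berlin"},
    {"user_id": "u3", "region": "Hamburg"},
]

dataset = integrate(impute_mean(clean_records(raw_usage), "minutes_watched"), profiles)
for record in dataset:
    print(record)
```

Keeping each step as a small pure function makes it easy to reorder or replace individual rules, for example swapping mean imputation for a different strategy.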

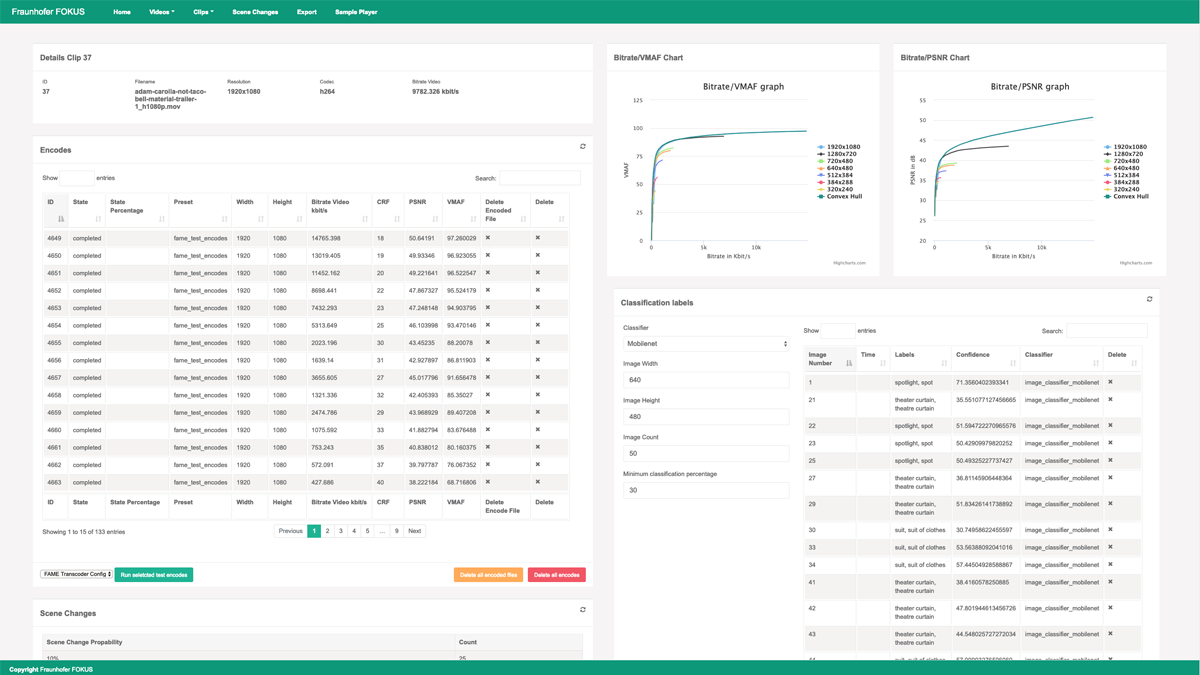

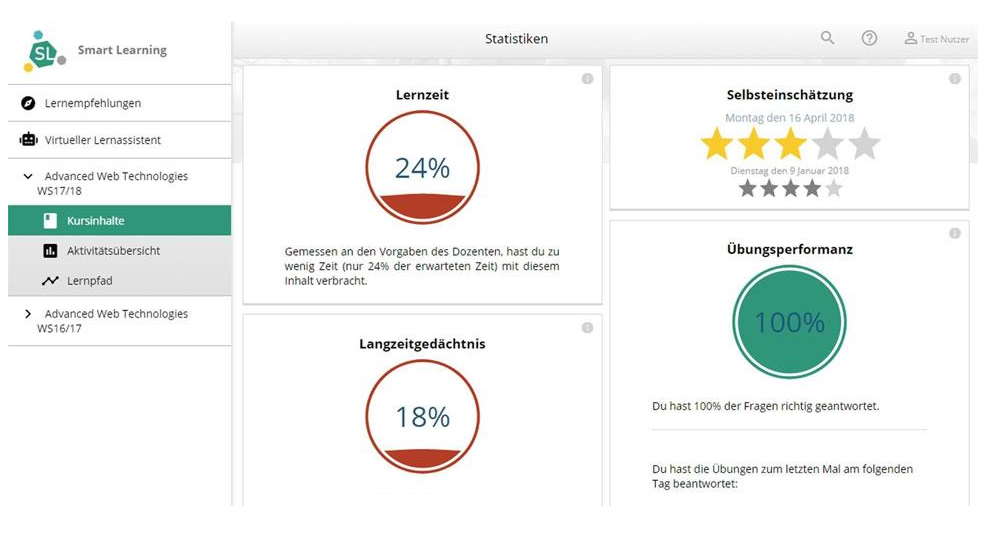

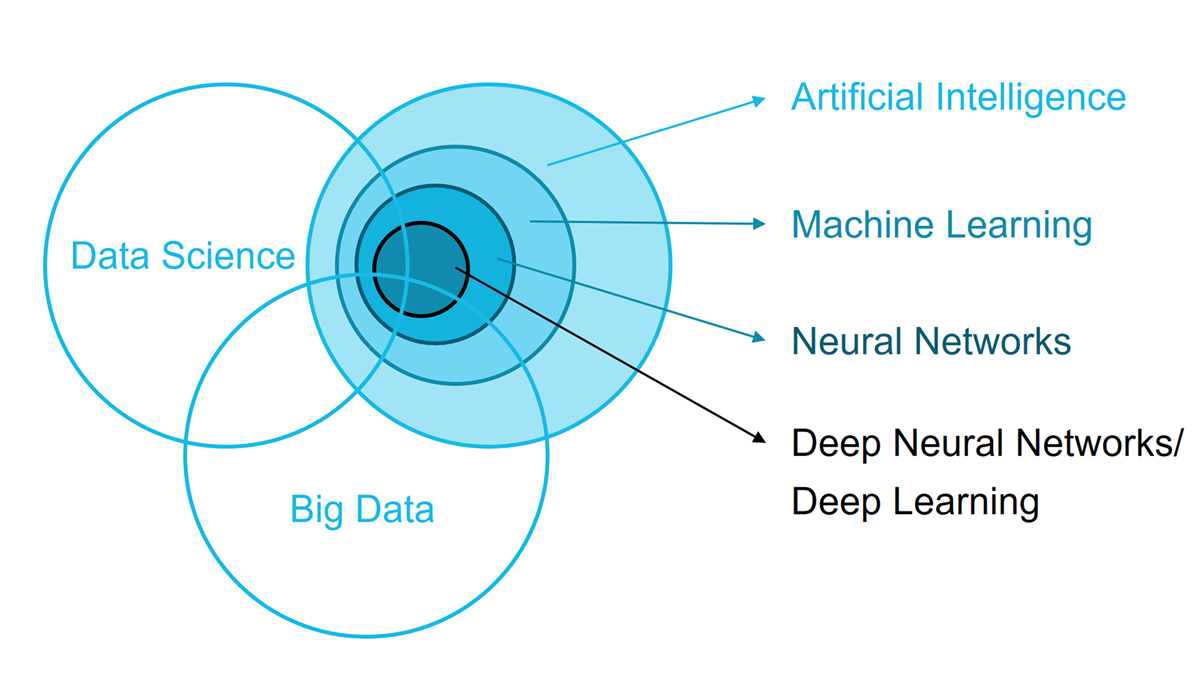

Next, different algorithms can be applied, for example regression (predicting numerical values, e.g., for forecasts) or classification (assigning labels to data points). To this end, Fraunhofer researchers develop and apply supervised and unsupervised learning techniques for pattern recognition and prediction tasks, using different flavors of neural networks, support vector machines, decision trees, random forests, gradient-boosted decision trees and many more.

These methods recognize and learn patterns in the data, and the learned patterns are then applied to previously unseen situations.
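To make the distinction between regression and classification concrete, here is a small sketch that uses two deliberately simple stand-ins: an ordinary least-squares line for regression and a nearest-centroid classifier for classification. These are not the models named above; the data, the labels (`short`, `long`) and the function names are assumptions made for illustration.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def fit_centroids(points, labels):
    """Nearest-centroid classifier: learn one mean vector per class."""
    sums, counts = {}, {}
    for point, label in zip(points, labels):
        acc = sums.setdefault(label, [0.0] * len(point))
        for i, value in enumerate(point):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def classify(centroids, point):
    """Assign the label of the closest centroid (squared Euclidean distance)."""
    def sq_dist(centroid):
        return sum((a - b) ** 2 for a, b in zip(centroid, point))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))

# Regression: learn a trend from past weeks and forecast an unseen week.
weeks = [1, 2, 3, 4]
views = [110.0, 130.0, 150.0, 170.0]
slope, intercept = fit_line(weeks, views)
forecast_week_5 = slope * 5 + intercept

# Classification: learn class centroids and label unseen data points.
points = [(1.0, 1.0), (1.0, 2.0), (8.0, 8.0), (9.0, 8.0)]
labels = ["short", "short", "long", "long"]
centroids = fit_centroids(points, labels)
label_a = classify(centroids, (2.0, 1.0))
label_b = classify(centroids, (7.0, 9.0))

print(forecast_week_5, label_a, label_b)
```

In both cases the model is fitted on known examples and then applied to inputs it has not seen, which is the pattern the more powerful model families follow as well.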

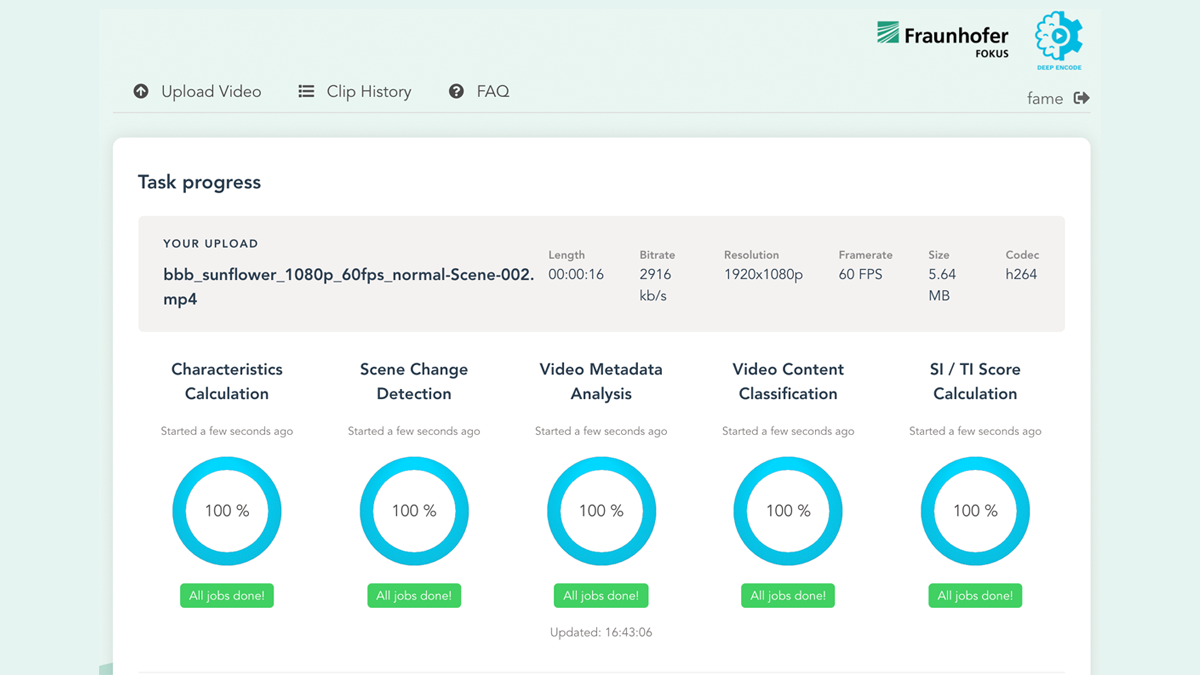

The training and optimization of ML models is enabled through our end-to-end machine learning pipeline, which includes automatic training, hyperparameter tuning and model serving. The pipeline builds on TensorFlow (enabling GPU and TPU usage) and produces accurate predictions in real time. Results are automatically evaluated with the help of well-specified evaluation frameworks that can be adjusted to the customers’ needs. An evaluation framework defines how data is fed to the algorithms so that runs are comparable and reliable, and how the quality, accuracy and performance of the results are measured.
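The interplay of hyperparameter tuning and a fixed evaluation protocol can be sketched without any framework. The example below is not the TensorFlow pipeline described above; it swaps in a k-nearest-neighbour classifier, a plain grid search over `k`, and deterministic 3-fold cross-validation, all written against the Python standard library. The toy samples, including one deliberately noisy label, are hypothetical.

```python
from collections import Counter

def knn_predict(train, point, k):
    """Majority vote among the k nearest training samples."""
    nearest = sorted(
        train,
        key=lambda s: sum((a - b) ** 2 for a, b in zip(s[0], point)),
    )[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def k_fold_indices(n, folds):
    """Deterministic split: sample i is held out in fold i % folds."""
    return [[i for i in range(n) if i % folds == f] for f in range(folds)]

def cross_val_accuracy(samples, k, folds=3):
    """Evaluation protocol: every candidate sees exactly the same splits."""
    scores = []
    for test_idx in k_fold_indices(len(samples), folds):
        held_out = set(test_idx)
        train = [s for i, s in enumerate(samples) if i not in held_out]
        test = [samples[i] for i in test_idx]
        hits = sum(knn_predict(train, x, k) == y for x, y in test)
        scores.append(hits / len(test))
    return sum(scores) / len(scores)

def tune(samples, candidates, folds=3):
    """Grid search: score each hyperparameter value and keep the best."""
    results = {k: cross_val_accuracy(samples, k, folds) for k in candidates}
    best = max(results, key=results.get)
    return best, results

# Hypothetical one-dimensional toy data; the last sample carries a noisy label.
samples = [
    ((0.0,), "a"), ((10.0,), "b"), ((1.0,), "a"),
    ((11.0,), "b"), ((2.0,), "a"), ((12.0,), "b"),
    ((3.0,), "a"), ((13.0,), "b"), ((1.4,), "b"),
]

best_k, results = tune(samples, candidates=[1, 3, 5])
print(best_k, results)
```

Because the folds are fixed, differences between scores come from the hyperparameter alone, which is what makes the comparison reliable. On this toy data `k=3` scores highest: `k=1` is misled by the noisy sample, and `k=5` averages over too many distant neighbours.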

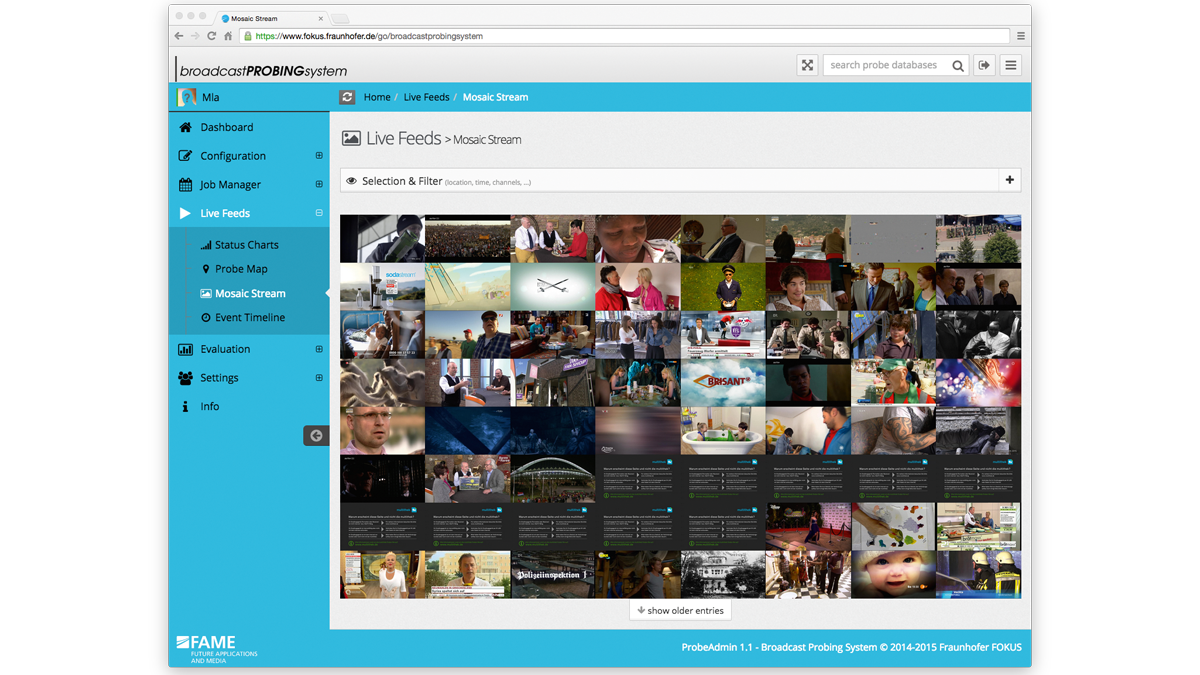

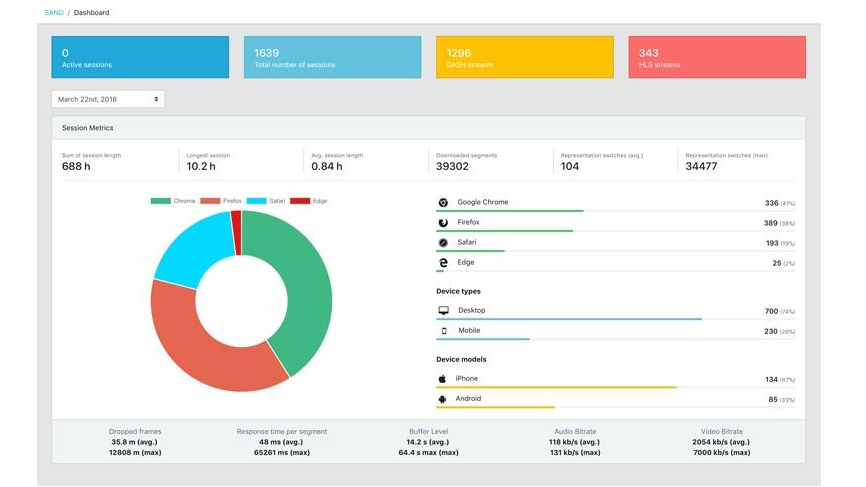

Finally, the results and algorithmic conclusions are presented to various stakeholders. For this purpose, the data is first reprocessed, simplified and explained, and then visualized, so that the presented results directly answer the research questions and are useful to the stakeholders concerned.

Further AI and Machine Learning Activities