Our Gen AI activities can be summarized as follows:

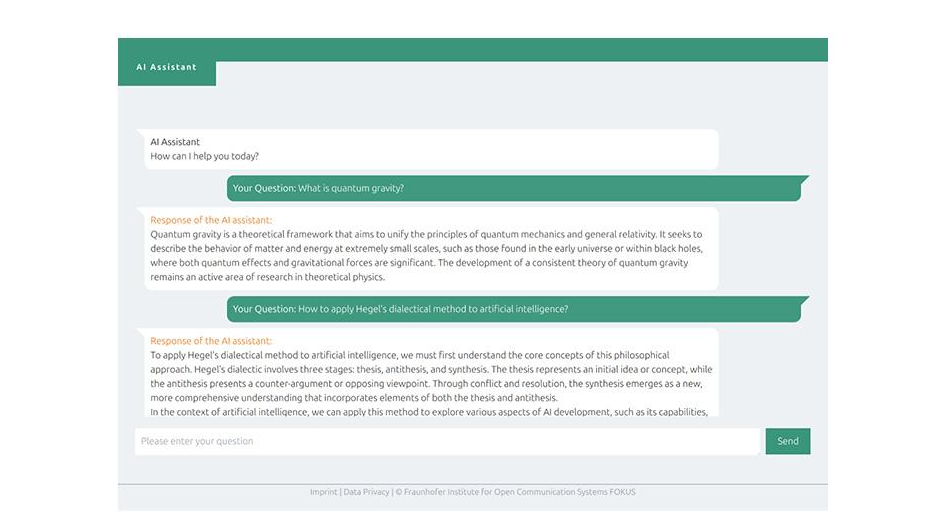

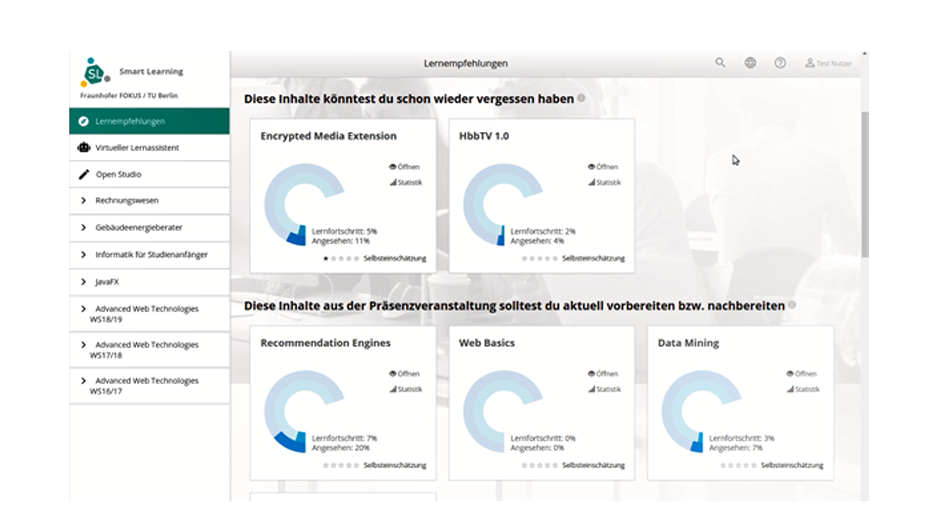

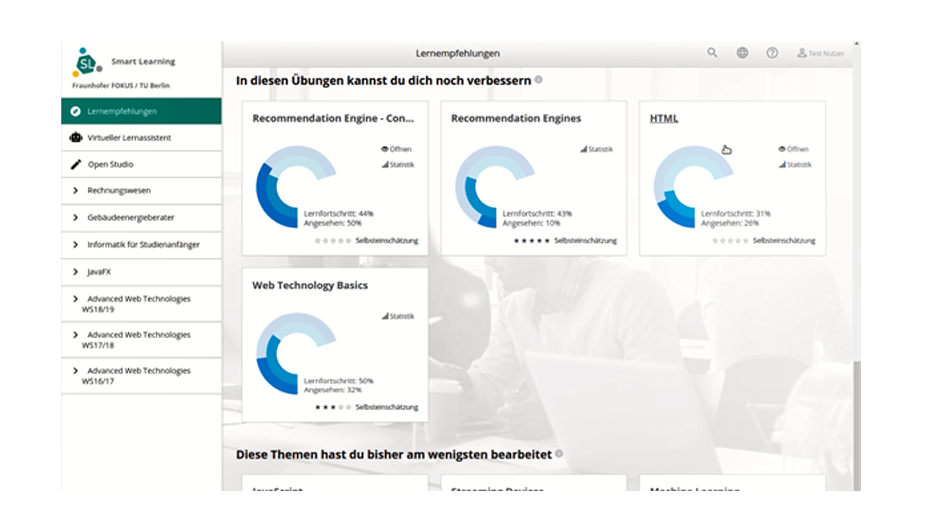

Document-based Chatbots and Semantic Search

Techniques like Retrieval Augmented Generation (RAG) split large documents into small chunks. Each chunk is passed through an embedding model (often itself a transformer language model) to obtain its numerical representation: a vector of floating-point numbers that encodes the chunk as the model sees it. User queries are embedded the same way, so mathematical operations such as measuring the distance between a query vector and the chunk vectors (for example, with cosine similarity) identify the most relevant passages. These passages are then inserted into the prompt, extending the LLM's knowledge with the content of specific documents. This allows low-barrier interaction with concrete documents such as learning material.
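The retrieval step described above can be sketched in a few lines. This is a minimal illustration, not our production pipeline: it assumes word-window chunking, and the `embed` function is a toy stand-in (normalized term counts) for a real embedding model, which would return a dense vector of floats instead. All function names are hypothetical.

```python
import math
import re


def chunk_text(text: str, max_words: int = 40, overlap: int = 10) -> list[str]:
    """Split text into overlapping windows of at most max_words words."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in [0, max_words)")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reaches the end of the text
    return chunks


def embed(text: str) -> dict[str, float]:
    """Toy stand-in for an embedding model: L2-normalized term counts.

    A real pipeline would call an embedding model here; the retrieval
    logic below would stay the same.
    """
    counts: dict[str, float] = {}
    for token in re.findall(r"\w+", text.lower()):
        counts[token] = counts.get(token, 0.0) + 1.0
    norm = math.sqrt(sum(v * v for v in counts.values())) or 1.0
    return {token: v / norm for token, v in counts.items()}


def cosine_similarity(a: dict[str, float], b: dict[str, float]) -> float:
    """Dot product of two unit-length vectors, i.e. their cosine similarity."""
    return sum(value * b.get(token, 0.0) for token, value in a.items())


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks whose vectors are closest to the query vector."""
    query_vec = embed(query)
    index = [(chunk, embed(chunk)) for chunk in chunks]  # usually precomputed and stored
    ranked = sorted(index, key=lambda item: cosine_similarity(query_vec, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def build_prompt(query: str, context_chunks: list[str]) -> str:
    """Insert the retrieved chunks into the prompt sent to the LLM."""
    context = "\n\n".join(context_chunks)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"


corpus = [
    "The mitochondria is the powerhouse of the cell.",
    "Python lists support append and pop operations.",
    "Photosynthesis happens in the chloroplasts of plant cells.",
]
question = "How do I append to a Python list?"
print(build_prompt(question, retrieve(question, corpus, k=1)))
```

Swapping `embed` for a call to a real embedding model and the in-memory list for a vector database leaves the structure of `retrieve` and `build_prompt` unchanged.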

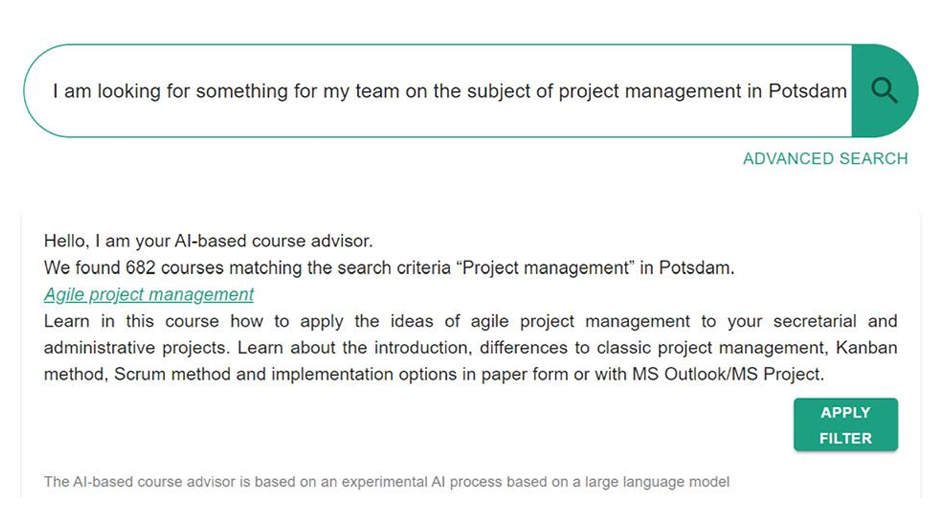

Structuring Unstructured Data with LLMs

While many detailed data specifications exist in the field of Learning Technologies, the information that concrete Learning Objects actually carry is often sparse. In addition, much of the data companies deal with is unstructured, such as internal memos and protocols. In both cases, LLMs can be used to generate structured outputs from the underlying unstructured documents. For example, free-text course descriptions can be annotated with skills from the ESCO (European Skills, Competences, Qualifications and Occupations) taxonomy, and natural-language user queries can be turned into structured outputs that follow these data specifications to improve content discovery.
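A common pattern for the skill annotation described above is to ask the model for JSON restricted to a controlled vocabulary and to validate the reply before storing it. The sketch below assumes that pattern; `KNOWN_SKILLS` is a hypothetical hard-coded excerpt (a real setup would load ESCO skill concepts from the ESCO data), and the model reply is made up rather than produced by an actual LLM call.

```python
import json
from dataclasses import dataclass

# Hypothetical vocabulary excerpt; in practice these would be ESCO skill
# concepts loaded from the ESCO data, not hard-coded labels.
KNOWN_SKILLS = {"data analysis", "statistics", "project management"}


@dataclass
class CourseAnnotation:
    title: str
    skills: list[str]


def build_annotation_prompt(description: str) -> str:
    """Ask the model for JSON that only uses skills from the vocabulary."""
    allowed = ", ".join(sorted(KNOWN_SKILLS))
    return (
        "Extract the course title and the skills it teaches from the description below.\n"
        f"Only use skills from this list: {allowed}.\n"
        'Reply with JSON of the form {"title": "...", "skills": ["..."]}.\n\n'
        f"Description: {description}"
    )


def parse_annotation(raw_output: str) -> CourseAnnotation:
    """Validate the model's JSON reply before it enters the catalogue."""
    data = json.loads(raw_output)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    title = data.get("title")
    skills = data.get("skills")
    if not isinstance(title, str) or not title:
        raise ValueError("missing or empty 'title'")
    if not isinstance(skills, list) or not all(isinstance(s, str) for s in skills):
        raise ValueError("'skills' must be a list of strings")
    unknown = [s for s in skills if s not in KNOWN_SKILLS]
    if unknown:
        raise ValueError(f"skills not in the vocabulary: {unknown}")
    return CourseAnnotation(title=title, skills=skills)


# A made-up reply standing in for a real LLM call.
reply = '{"title": "Intro to Data Science", "skills": ["data analysis", "statistics"]}'
print(parse_annotation(reply))
```

Rejecting labels outside the vocabulary keeps hallucinated skills out of the structured data; a rejected reply can be retried or sent for manual review.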

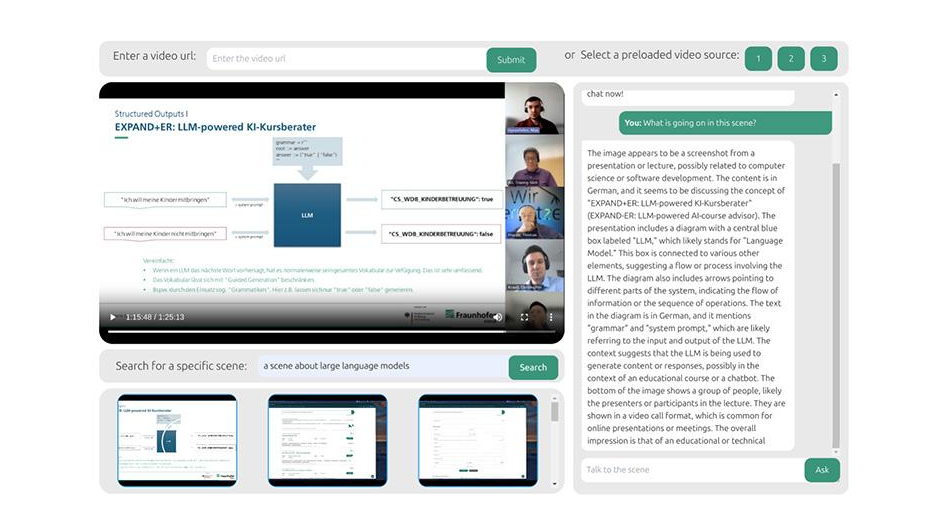

Multimodal Content Understanding

LLM-based applications can often be extended to handle content in modalities other than text. For example, Visual Language Models (VLMs) enable applications to reason over image and video data, which in turn makes it possible to apply techniques like RAG to collections of visual data. The same holds for models that process audio data, which can also serve as the backbone of Speech-To-Text and Text-To-Speech pipelines.
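One way to apply RAG to visual collections is to embed images, video frames and text queries into a shared vector space, retrieve the closest visuals, and pass them to a VLM together with the question. The sketch below assumes such a joint image-text encoder (CLIP-style); the 3-dimensional vectors, file names and the request dict layout are all hypothetical placeholders.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class IndexedItem:
    uri: str            # hypothetical location of the image or video frame
    modality: str       # e.g. "image" or "video_frame"
    vector: list[float]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two dense vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Made-up vectors standing in for the output of a joint image-text encoder;
# real encoders produce hundreds of dimensions.
INDEX = [
    IndexedItem("slides/cell_diagram.png", "image", [0.9, 0.1, 0.0]),
    IndexedItem("videos/lecture_03.mp4#t=120", "video_frame", [0.1, 0.9, 0.1]),
    IndexedItem("slides/sales_chart.png", "image", [0.0, 0.2, 0.95]),
]


def retrieve_visual(query_vector: list[float], index: list[IndexedItem],
                    k: int = 1, modality: Optional[str] = None) -> list[IndexedItem]:
    """Rank indexed visual items by similarity to an embedded text query."""
    candidates = [item for item in index if modality is None or item.modality == modality]
    candidates.sort(key=lambda item: cosine_similarity(query_vector, item.vector), reverse=True)
    return candidates[:k]


def build_vlm_request(question: str, items: list[IndexedItem]) -> dict:
    """Bundle the question with the retrieved visuals for a VLM call.

    The dict layout is a placeholder; each VLM API defines its own format.
    """
    return {"text": question, "images": [item.uri for item in items]}


# In a real system this vector would come from the text side of the same
# encoder, e.g. for the query "How is a cell structured?".
query_vector = [0.85, 0.15, 0.05]
hits = retrieve_visual(query_vector, INDEX, k=1)
print(build_vlm_request("How is a cell structured?", hits))
```

Because text and visuals share one vector space, the retrieval logic is the same as for text-only RAG; only the encoder and the final model call change.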